This project explores a local chatbot that answers questions from a limited set of personal documents, as a way to test the capabilities of lightweight language models. Among the models evaluated, Llama 4 Scout Instruct (17B active parameters) proved the most efficient, offering both speed and coherence despite the restricted input data. A key factor in its performance is the Mixture of Experts (MoE) architecture, which activates only a subset of specialized sub-networks for each token to improve efficiency and scalability. The model still exhibits hallucinations, an expected limitation at this scale, but the experiment highlights the potential of local models to deliver fast, personalized applications without relying on massive infrastructure.

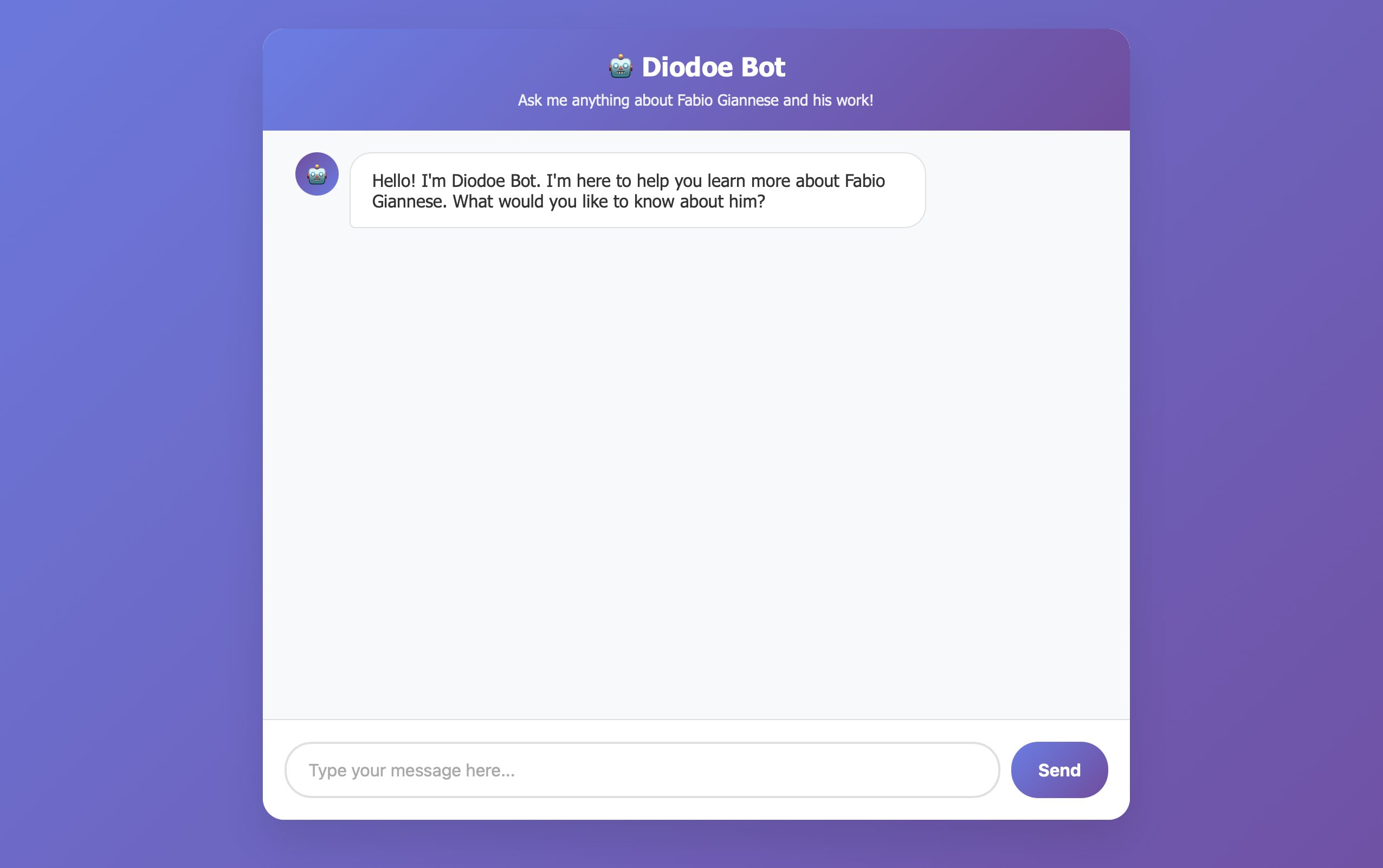

Over the past few weeks, I’ve been experimenting with something a little different: I built a chatbot that answers questions about me, my work, and my career. Not because I think the world desperately needs a “me-bot,” but mainly as a playground to explore how local language models behave when you feed them only a handful of personal documents.

What I found particularly interesting is how Llama 4 Scout Instruct performs. It activates about 17B parameters per token (out of roughly 109B in total), and among the models I tested, it struck the right balance between speed and consistency. It stays coherent even when it has access to very limited context, which makes it surprisingly useful for small-scale personal projects like this one.

A big part of that efficiency comes from the Mixture of Experts (MoE) architecture. Instead of activating the entire model for every token, MoE uses a learned router to send each token through only a small subset of specialized “experts” inside the network. In practice, this means the model's total parameter count can grow without a matching increase in per-token compute, which often leads to faster, more efficient inference. Recent MoE research suggests that this approach lets a model with a modest active-parameter budget punch above its weight, especially in scenarios where speed and efficiency matter.
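To make the routing idea concrete, here is a toy top-k MoE layer in plain Python. It is a minimal sketch, not how Llama 4 Scout is actually implemented: the class name `ToyMoELayer`, the single linear map per expert (real experts are full feed-forward blocks), and the settings `num_experts=16`, `top_k=1` are all illustrative assumptions. It shows the core mechanism: a router scores every expert, only the top-k experts run, and their outputs are mixed using softmax weights over the chosen experts.

```python
import math
import random


def softmax(xs):
    # Numerically stable softmax over a list of floats.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(w, x):
    # Multiply a matrix (list of rows) by a vector.
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]


class ToyMoELayer:
    """Hypothetical toy top-k Mixture-of-Experts layer (illustration only)."""

    def __init__(self, dim, num_experts, top_k, seed=0):
        rng = random.Random(seed)
        self.top_k = top_k
        # Router: one row per expert, producing one score per expert.
        self.router = [[rng.gauss(0, 1) for _ in range(dim)] for _ in range(num_experts)]
        # Each "expert" here is just a dim x dim linear map (assumption for brevity).
        self.experts = [
            [[rng.gauss(0, 1 / math.sqrt(dim)) for _ in range(dim)] for _ in range(dim)]
            for _ in range(num_experts)
        ]
        # Count how many times each expert is actually evaluated.
        self.calls = [0] * num_experts

    def forward(self, token):
        scores = matvec(self.router, token)
        # Keep only the top-k experts by router score; the rest are skipped entirely.
        chosen = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[: self.top_k]
        # Gate weights are renormalized over the chosen experts only.
        gates = softmax([scores[i] for i in chosen])
        out = [0.0] * len(token)
        for g, i in zip(gates, chosen):
            self.calls[i] += 1
            y = matvec(self.experts[i], token)
            out = [o + g * yi for o, yi in zip(out, y)]
        return out, chosen


if __name__ == "__main__":
    layer = ToyMoELayer(dim=8, num_experts=16, top_k=1)
    rng = random.Random(42)
    for _ in range(100):
        layer.forward([rng.gauss(0, 1) for _ in range(8)])
    # With top_k=1, 100 tokens trigger 100 expert evaluations,
    # versus 1600 if all 16 experts ran for every token.
    print(sum(layer.calls))  # prints 100
```

The design choice to illustrate is the ratio: per-token expert compute scales with `top_k`, while capacity scales with `num_experts`. A real MoE model also needs load-balancing during training so the router does not collapse onto a few experts; that is omitted here.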

Of course, the technology is still far from perfect. On models of this size, hallucinations are hard to avoid, so if you do try the bot, don’t take everything it says as gospel truth. Think of it more as an exploratory experiment, one that sits somewhere between a personal archive and an AI lab test.

For me, the real value has been less about the “answers” it gives and more about understanding how these architectures behave in practice. Even a playful project like this offers a glimpse of where local models are heading, and how they might soon become powerful enough to handle useful, highly personalized tasks without needing massive infrastructure behind them.
